Architecture & Hardware Guide

XCore is a multicore microprocessor which enables highly flexible and responsive I/O, whilst delivering high performance for applications. The architecture enables a programming model where many simple tasks run concurrently and communicate using hardware-supported transactions. Multiple XCore processors can be ‘networked’, and tasks running on separate physical processors can communicate seamlessly. To utilise the platform effectively it is helpful to understand the hardware model; this document provides an overview of the platform and its features, and an introduction to using them from the C programming language.

Quick start

The XCore architecture allows applications to scale across multiple physical packages, providing high performance as well as low-latency I/O. A complete XCore application targets an ‘XCore Network’ and may consist of several independent applications which communicate using features of the XCore hardware.

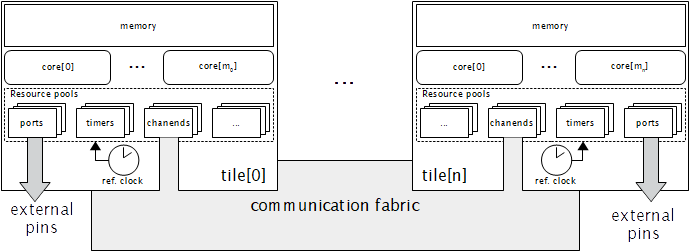

An XCore network is made up of one or more packages; these are connected by the xCONNECT interconnect to allow high-speed, hardware-assisted communication.

Each package contains one or more tiles, plus a communication fabric which carries traffic within and between those tiles.

Each tile contains one or more cores, some memory, a reference clock, and a variety of resources.

Each core acts like a hardware thread - it shares access to the tile’s memory and resources with other cores, but all cores execute code concurrently and independently of each other.

Resources act like co-processors - they can be allocated by any core on the same tile and are used to hardware-accelerate various common tasks. For example, port resources provide flexible GPIO, and timer resources can be used to measure time with respect to the reference clock.
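The containment hierarchy described above (network, node, tile, core) can be pictured as a plain C data model. The types `network_model`, `node_model` and `tile_model`, the limits `MAX_NODES` and `MAX_TILES_PER_NODE`, and the function `network_core_count` are hypothetical names used only for this illustration. They are not part of any XCore library, a real program does not build this structure itself, and memory, clocks and resources are omitted.

```c
#include <assert.h>

/* Hypothetical upper bounds for this illustration; real devices differ. */
#define MAX_NODES 4
#define MAX_TILES_PER_NODE 4

/* A tile: a number of cores (memory, reference clock and resources omitted). */
typedef struct {
    int core_count;
} tile_model;

/* A node is one physical package containing one or more tiles. */
typedef struct {
    int tile_count;
    tile_model tiles[MAX_TILES_PER_NODE];
} node_model;

/* A network of one or more nodes joined by xCONNECT. */
typedef struct {
    int node_count;
    node_model nodes[MAX_NODES];
} network_model;

/* Total number of cores in the network. Every core executes concurrently,
   so this is also how many one-core tasks can run at the same time. */
static int network_core_count(const network_model *net)
{
    int total = 0;
    assert(net->node_count >= 1 && net->node_count <= MAX_NODES);
    for (int n = 0; n < net->node_count; n++) {
        const node_model *node = &net->nodes[n];
        assert(node->tile_count >= 1 && node->tile_count <= MAX_TILES_PER_NODE);
        for (int t = 0; t < node->tile_count; t++)
            total += node->tiles[t].core_count;
    }
    return total;
}
```

For example, with hypothetical counts of two nodes, each holding two tiles of eight cores, `network_core_count` returns 32.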

Nodes

Each physical package is referred to as a node. A network can be a single node, or two or more packages can be connected such that they may communicate using the xCONNECT infrastructure. Each node has its own external pins, and contains one or more tiles, plus some communication fabric to allow communication between those tiles and with other nodes on the network (if any).

Tiles

Tiles are individual processors contained within nodes; each tile has its own memory, I/O subsystem, reference clock and other resources. Tiles within a node communicate using that node’s communication fabric, and communicate with tiles in other packages using the external links.

Cores

Each tile has one or more cores, which are essentially logical processors. Each core has its own registers and executes instructions independently of other cores. However, all cores within a tile share access to that tile’s resources and memory. Cores within a tile contend for compute time; however, the tile has more processing capability than a single core can use, so several (but not all) cores can run at full speed simultaneously. If more cores are running than can run at full speed, the XCore scheduler shares cycles between them round-robin on a per-cycle basis, so that every core makes progress. Because each core has its own registers, there is no context-switching overhead. Any core in a paused state (e.g. waiting for an event) is de-scheduled, which may give the remaining cores a larger share of the tile’s cycles.
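The scheduling behaviour above can be illustrated with a small host-side C simulation. This is a model, not XCore code: the function `simulate_tile` and the parameters `MAX_CORES = 8` and `PIPELINE_DEPTH = 5` are hypothetical, chosen to mimic a tile on which up to five cores can run at full speed; the real figures depend on the device. In the model, a core may issue at most one instruction every `PIPELINE_DEPTH` cycles, and each cycle goes to the next eligible ready core in round-robin order.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_CORES 8
/* Hypothetical parameter: a core may issue at most one instruction every
   PIPELINE_DEPTH cycles, so up to PIPELINE_DEPTH cores run at full speed. */
#define PIPELINE_DEPTH 5

/* Model `cycles` clock cycles of per-cycle round-robin issue.
   ready[i] is false for a paused (de-scheduled) core.
   On return, issued[i] holds the number of instructions core i issued. */
static void simulate_tile(const bool ready[MAX_CORES], long cycles,
                          long issued[MAX_CORES])
{
    long last_issue[MAX_CORES];
    int next = 0; /* where the next round-robin scan starts */

    assert(cycles >= 0);
    for (int i = 0; i < MAX_CORES; i++) {
        issued[i] = 0;
        last_issue[i] = -PIPELINE_DEPTH; /* every core is eligible at cycle 0 */
    }

    for (long cycle = 0; cycle < cycles; cycle++) {
        /* Give this cycle to the first ready core, in round-robin order,
           whose previous issue was at least PIPELINE_DEPTH cycles ago. */
        for (int k = 0; k < MAX_CORES; k++) {
            int c = (next + k) % MAX_CORES;
            if (ready[c] && cycle - last_issue[c] >= PIPELINE_DEPTH) {
                issued[c]++;
                last_issue[c] = cycle;
                next = (c + 1) % MAX_CORES;
                break;
            }
        }
        /* If no core was eligible, this cycle is simply unused. */
    }
}
```

With six ready cores over 600 cycles, each core issues 100 instructions; pausing one of them (setting its `ready` entry to `false`) raises each remaining core to 120, the model’s full-speed rate of one issue every five cycles. With only three ready cores, some cycles go unused, since no core can issue faster than that full-speed rate.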

I/O and Pooled Resources

Resources are shared between all cores in a tile. Many types of resource are available, and the exact types and numbers of resources vary between devices. Resources are general-purpose peripherals which help to accelerate real-time tasks and enable efficient software implementations of higher-level peripherals, e.g. a UART. Many of the available resource types are described in later sections. Because resources are so diverse, their interfaces vary somewhat. However, most resources have some or all of the following traits:

Pooled - the XCore tile maintains a pool of each resource type; a core can allocate a resource from the pool and free it when it is no longer required.

Input/Output - values can be read from and/or written to the resource (e.g. the value of a group of external pins).

Event-raising - the resource can generate events when a condition occurs (on input resources, this will indicate that data is available to be read). Events can wake a core from a paused state.

Configurable triggers - some ‘event-raising’ resources can be configured to generate events under programmable conditions.
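The ‘pooled’ trait can be sketched with a host-side model of one resource type, using a bitmask to record which instances are allocated. The `resource_pool` type and the `pool_alloc`/`pool_free` functions are hypothetical; on a real tile the pool is maintained by the hardware, and this code only illustrates the bookkeeping and the fact that a pool can run out.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical host-side model of one pooled resource type (at most 32
   instances), tracking which instances are in use as a bitmask. */
typedef struct {
    uint32_t in_use; /* bit i set => instance i is allocated */
    int size;        /* number of instances on this tile */
} resource_pool;

/* Allocate the lowest-numbered free instance.
   Returns its index, or -1 if every instance is already allocated. */
static int pool_alloc(resource_pool *p)
{
    assert(p->size >= 0 && p->size <= 32);
    for (int i = 0; i < p->size; i++) {
        uint32_t bit = UINT32_C(1) << i;
        if ((p->in_use & bit) == 0) {
            p->in_use |= bit;
            return i;
        }
    }
    return -1;
}

/* Return an instance to the pool so that any core can allocate it again. */
static void pool_free(resource_pool *p, int id)
{
    assert(id >= 0 && id < p->size);
    assert(p->in_use & (UINT32_C(1) << id)); /* must currently be allocated */
    p->in_use &= ~(UINT32_C(1) << id);
}
```

Because pools are finite and their sizes vary between devices, code that allocates resources at run time should be prepared for allocation to fail, as the `-1` return models here.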

Though available resources vary, all tiles have a number of common resource types:

Ports - These provide input from and output to the physical pins attached to the tile. Ports are highly configurable; they can automatically shift data in and out, as well as generate events when certain values are read. As they have a fixed mapping to physical pins, ports are allocated explicitly (rather than from a pool), and have fixed widths which can be 1, 4, 8, 16 or 32 bits.

Clock Blocks - Configurable clocks for controlling the rate at which a port shifts data in or out. These can divide the reference clock or be driven by a single-bit port.

Timers - Provide a means of measuring time as well as generating events at fixed times in the future - this can be used to implement very precise delays.

Chanends - An endpoint for communicating over the network fabric. A chanend (short for ‘channel end’) can communicate with any other chanend in the network (whether on the same tile, or on a different physical node).
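Because a timer is a free-running counter, any code that computes a future time and later compares against it has to cope with the counter wrapping around. The sketch below assumes a 32-bit counter ticking at 100 MHz (the constant `TICKS_PER_US` is an assumption; check the reference clock frequency for your device), and the helper names `deadline_after_us` and `time_reached` are hypothetical. It is plain host C showing the arithmetic only, not how a core actually waits for a timer event.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Assumption: 100 MHz reference clock, i.e. 100 timer ticks per microsecond. */
#define TICKS_PER_US 100u

/* Deadline `us` microseconds after `start`; unsigned arithmetic wraps mod 2^32,
   just as a 32-bit hardware counter does. */
static uint32_t deadline_after_us(uint32_t start, uint32_t us)
{
    return start + us * TICKS_PER_US;
}

/* True once `now` has reached or passed `deadline`, even if the counter wrapped
   in between. Valid while the two values are less than 2^31 ticks apart. */
static bool time_reached(uint32_t now, uint32_t deadline)
{
    assert(TICKS_PER_US > 0);
    /* A "small" forward distance (below 2^31) means the deadline has passed. */
    return (uint32_t)(now - deadline) < UINT32_C(0x80000000);
}
```

Treating the wrapped difference as a signed distance keeps the comparison correct across a wrap, as long as the deadline is less than 2^31 ticks (about 21 seconds at the assumed 100 MHz) away.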

Communication Fabric

The communication fabric is a physical link between chanends within a network, which allows any chanend to send data to any other chanend. When one chanend first sends data to another, a path through the network is established and a link opened. This link persists until closed explicitly (usually as part of a transaction) and carries all traffic from the sender to the receiver during that time. Links are directed, so if chanend A sends data to chanend B, and then (without the link being closed) B sends data back to A, two links will be opened. These two links will not necessarily take the same route through the network. Within a single node there is always enough capacity for at least two links to be open at once; between nodes, the capacity depends on the number of physical links which are connected.
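The directed-link behaviour can be modelled as a table of open (source, destination) pairs. In the host-side sketch below, `fabric_model`, `fabric_init`, `fabric_send` and `fabric_close` are hypothetical names, chanends are plain integers, and `LINK_CAPACITY = 2` reflects only the minimum the text guarantees within a single node. Routing is not modelled, and a `false` return from `fabric_send` only marks that no further link is available in the model; it makes no claim about how the hardware responds.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_CHANENDS 8
/* Hypothetical: the text guarantees at least two concurrent links in a node. */
#define LINK_CAPACITY 2

typedef struct {
    bool open[MAX_CHANENDS][MAX_CHANENDS]; /* open[src][dst]: a directed link */
    int open_count;
    int capacity;
} fabric_model;

static void fabric_init(fabric_model *f, int capacity)
{
    for (int s = 0; s < MAX_CHANENDS; s++)
        for (int d = 0; d < MAX_CHANENDS; d++)
            f->open[s][d] = false;
    f->open_count = 0;
    f->capacity = capacity;
}

/* Send from src to dst: reuse an open src->dst link, or open a new one.
   Returns false if a new link is needed but the capacity is exhausted. */
static bool fabric_send(fabric_model *f, int src, int dst)
{
    assert(src >= 0 && src < MAX_CHANENDS && dst >= 0 && dst < MAX_CHANENDS);
    if (f->open[src][dst])
        return true;          /* existing link carries this traffic */
    if (f->open_count >= f->capacity)
        return false;         /* no room for another link in this model */
    f->open[src][dst] = true; /* note: dst->src is a separate link */
    f->open_count++;
    return true;
}

/* Explicitly close the src->dst link (typically at the end of a transaction). */
static void fabric_close(fabric_model *f, int src, int dst)
{
    if (f->open[src][dst]) {
        f->open[src][dst] = false;
        f->open_count--;
    }
}
```

Sending from chanend 0 to 1 and then from 1 back to 0 without closing opens two links, filling the modelled two-link capacity; a third pair of chanends cannot open a link until one of those is closed.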